While researching the use of data lakes in a modern cloud data warehouse, I came across several references to a data Lakehouse. What is a Lakehouse? A Lakehouse combines the benefits of a data lake and a data warehouse in a single cloud-based analytics platform.

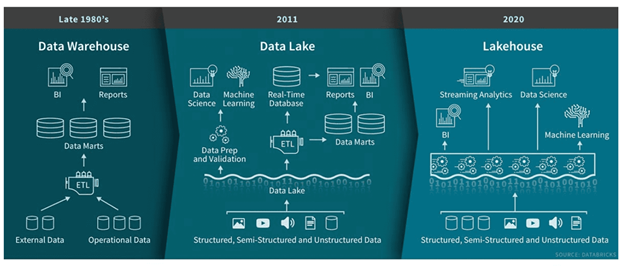

In a traditional data warehouse, extract, transform, and load (ETL) processes are created to ingest data from various source files or systems into a centralized database using schema-on-write architecture. This allows for optimization of the data model and supports easy consumption by business users. However, some of the drawbacks include only supporting structured data, hard to handle streaming data, a potential higher cost from compute and storage being on a single server provisioned for peak usage, and limited advanced analytics support for data science and machine learning.

Figure 1. Evolution of Data Solutions. Image Source from Databricks

Data lakes were introduced to alleviate some of the above problems. Raw data is placed in a low-cost storage system that supports both structured and unstructured data (e.g., video, audio, and text) using a schema-on-read architecture. However, this only moves the problem downstream. The lack of data governance, the absence of transaction support, and the inability to handle concurrent reads and writes force companies to build a two-tiered system. Raw data is stored in the data lake for data science and machine learning, and a separate data warehouse is used for curated reporting and business intelligence. This is not an ideal strategy. Repeatedly moving data between the two tiers makes the solution complicated, slow, unreliable, and expensive.
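To make the difference between schema-on-write and schema-on-read concrete, here is a minimal Python sketch. It is only an illustration: the `ORDERS_SCHEMA` table, the function names, and the in-memory lists standing in for a warehouse table and lake storage are all assumptions made for this example, not part of any real product.

```python
import json

# Hypothetical schema for an "orders" table: column name -> expected Python type.
ORDERS_SCHEMA = {"order_id": int, "amount": float}


def conforms(record, schema):
    """Return True if the record has exactly the schema's columns with matching types."""
    return set(record) == set(schema) and all(
        isinstance(record[col], typ) for col, typ in schema.items()
    )


def warehouse_write(table, record, schema=ORDERS_SCHEMA):
    """Schema-on-write: validate before storing and reject anything that does not fit."""
    if not conforms(record, schema):
        raise ValueError(f"record rejected at write time: {record!r}")
    table.append(record)


def lake_write(files, raw_line):
    """Schema-on-read: store the raw text as-is, with no validation."""
    files.append(raw_line)


def lake_read(files, schema=ORDERS_SCHEMA):
    """Apply the schema only when reading; return (good rows, number of bad rows)."""
    good, bad = [], 0
    for line in files:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            bad += 1
            continue
        if isinstance(record, dict) and conforms(record, schema):
            good.append(record)
        else:
            bad += 1
    return good, bad


# The warehouse only ever holds clean rows.
warehouse = []
warehouse_write(warehouse, {"order_id": 1, "amount": 9.5})

# The lake accepts everything; problems surface later, at read time.
lake = []
lake_write(lake, '{"order_id": 2, "amount": 20.0}')
lake_write(lake, "not json at all")
lake_write(lake, '{"order_id": "three"}')
rows, bad = lake_read(lake)
print(rows, bad)
```

The write path of the lake never fails, which is what makes it cheap and flexible, but every reader has to cope with the bad records. That is the "problem moved downstream" described above.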

The Lakehouse tries to solve the issues of the earlier generations of architecture by combining the best of data lakes and data warehouses. Some of the key features include transaction support, schema enforcement and governance, storage decoupled from compute, open storage formats, and business intelligence (BI) directly on the source data.

Delta Lake technology in the Lakehouse creates a transactional metadata layer on top of the data lake. A transaction log describing each table is stored alongside the data files, which are kept in the open Parquet format. This enables schema enforcement, ACID transaction support, and zero-copy cloning.
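The idea of a transaction log sitting next to the data files can be sketched in a few lines of Python. This is a deliberately simplified toy model, not Delta Lake's actual log format or API: the `_log` directory name, the `add`/`remove` action shape, and the `commit` and `live_files` helpers are assumptions made for this illustration, and the data files exist here only as names.

```python
import json
import tempfile
from pathlib import Path

LOG_DIR = "_log"  # hypothetical log directory name used only in this sketch


def commit(table_dir, actions):
    """Record a list of actions as the next numbered commit file and return its version."""
    log = Path(table_dir) / LOG_DIR
    log.mkdir(parents=True, exist_ok=True)
    version = len(list(log.glob("*.json")))
    path = log / f"{version:020d}.json"
    # Mode "x" raises FileExistsError if another writer already claimed this version.
    with open(path, "x") as f:
        json.dump(actions, f)
    return version


def live_files(table_dir, as_of=None):
    """Replay commits in order (optionally up to a version) to find the table's data files."""
    files = set()
    log = Path(table_dir) / LOG_DIR
    for path in sorted(log.glob("*.json")):
        if as_of is not None and int(path.stem) > as_of:
            break
        for action in json.loads(path.read_text()):
            if action["op"] == "add":
                files.add(action["file"])
            elif action["op"] == "remove":
                files.discard(action["file"])
    return files


table = tempfile.mkdtemp()
v0 = commit(table, [{"op": "add", "file": "part-0.parquet"}])
v1 = commit(table, [{"op": "add", "file": "part-1.parquet"}])
v2 = commit(table, [{"op": "remove", "file": "part-0.parquet"}])

print(sorted(live_files(table)))           # ['part-1.parquet']
print(sorted(live_files(table, as_of=1)))  # ['part-0.parquet', 'part-1.parquet']
```

Because the log, not the directory listing, decides which files belong to the table, a reader can reconstruct any earlier version by replaying fewer commits, and a second table could in principle point at the same files without copying them.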

Atomicity, consistency, isolation, and durability (ACID) transactions allow simultaneous reads and writes on the same table. Versioning is done using snapshots, which lets developers audit, roll back, and replicate the data. Because the Lakehouse is a unified data platform, data governance is simpler: it does not have to span multiple tools. Validating data against a defined schema helps keep dirty data out. Together, these features provide data reliability within the data lake.
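A small in-memory sketch shows how snapshots and schema validation work together. The `VersionedTable` class below is hypothetical and written only for this article; it illustrates the concepts under simplified assumptions (a single process, no durability) and says nothing about how a real Lakehouse engine implements them.

```python
class VersionedTable:
    """Toy in-memory table: each successful write produces a new immutable snapshot."""

    def __init__(self, schema):
        self.schema = schema    # column name -> expected Python type
        self.snapshots = [()]   # version 0 is the empty table

    @property
    def version(self):
        return len(self.snapshots) - 1

    def _validate(self, row):
        if set(row) != set(self.schema):
            raise ValueError(f"columns do not match schema: {sorted(row)}")
        for col, typ in self.schema.items():
            if not isinstance(row[col], typ):
                raise TypeError(f"{col!r} must be {typ.__name__}")

    def append(self, rows):
        """Validate every row first, so a bad batch leaves the table unchanged."""
        rows = list(rows)
        for row in rows:
            self._validate(row)
        self.snapshots.append(self.snapshots[-1] + tuple(dict(r) for r in rows))
        return self.version

    def read(self, version=None):
        """Read the latest snapshot, or an older one for an audit."""
        return list(self.snapshots[self.version if version is None else version])

    def rollback(self, version):
        """Restore an earlier state by committing it as a new snapshot."""
        self.snapshots.append(self.snapshots[version])
        return self.version


t = VersionedTable({"id": int, "name": str})
t.append([{"id": 1, "name": "a"}])  # version 1
t.append([{"id": 2, "name": "b"}])  # version 2

try:
    # The second row has the wrong type, so the whole batch is rejected.
    t.append([{"id": 3, "name": "c"}, {"id": "x", "name": "d"}])
except TypeError as err:
    print("rejected:", err)

print(t.version)      # 2
print(t.read(1))      # [{'id': 1, 'name': 'a'}]
print(t.rollback(1))  # 3
print(t.read())       # [{'id': 1, 'name': 'a'}]
```

A reader holding version 1 keeps seeing the same rows while later writes add new snapshots, which is the intuition behind reading and writing the same table at the same time.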

An important feature of the Lakehouse is that business intelligence tools such as Tableau and Power BI can connect directly to the source data in the data lake. This reduces the time it takes to create advanced analytics products and decreases the expense of storing copies of the data in both the data lake and the data warehouse.

In this article, we briefly covered the differences between a data warehouse, a data lake, and a Lakehouse. The Lakehouse is a new architectural approach to solving some of today's problems with analytics and machine learning at large scale. Although it is still in its infancy, the Lakehouse will continue to evolve and mature. Could it be the future of data warehousing and analytics? I am excited about the technology and encourage you to see how it can help your business.
